How we evaluate applicants for positions in our group

After we’ve decided to hire, constructed the position description, and posted it for about a month, we’ve got to evaluate the applicants.

While constructing the job ad, we think about what attributes we are looking for in the person we’ll hire. As we mentioned in our last newsletter, we require excellent communication skills (both verbal and written). We’re looking for someone who has solid scientific fundamentals and is an independent problem solver. We want someone who values diversity and has evidence of this in their past work. Finally, there is a baseline level of scientific knowledge that they’ll need to be a productive member of our group.

We don’t care why they got a ‘C’ in calculus. (Hey, I earned a C in calculus after failing it once!) We don’t count publications. We don’t scrutinize impact factors. We don’t care where the applicants learned how to do research or where they got their degree. None of these things are reliable predictors of whether they’ll be able to work well with us or their future success.

The five categories everyone is evaluated against are:

Are you an effective communicator (written)?

Do you understand the scientific process?

Do you display independence?

Do you value diversity?

Do you have some technical skills?

That’s it. We construct a rubric around these five categories and look for evidence of each of these in the applications. The nuances associated with what we are looking for might change from job to job, but the broad themes are the same. For example, we wouldn’t expect an undergraduate to have anaerobic cultivation experience if they were applying for a position that would be working with anaerobes. But we would score that same individual highly if they had taken an introductory microbiology lab course where they learned aseptic technique and how to use a micropipette. An example of one of our previous rubrics is below.

The specific scoring scheme has evolved over the last several hires. Moving forward, we’ll be weighting each category equally on a scale of 1-5, where 1 is no evidence, 3 is some evidence, and 5 is clear evidence (scores of 2 and 4 represent intermediate values). By weighting each category equally, we allow for multiple ways for applicants to score highly.
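To make the equal weighting concrete, here is a minimal sketch in Python. The category keys, the function name, and the choice to combine the five scores by simple summation are assumptions made for illustration; the rubric itself only specifies five equally weighted categories scored from 1 to 5.

```python
# Hypothetical keys for the five rubric categories (names are illustrative).
CATEGORIES = (
    "communication",
    "scientific_process",
    "independence",
    "diversity",
    "technical_skills",
)


def total_score(scores):
    """Add up the five category scores (each 1-5) with equal weight.

    Summation is an assumption; an average would give the same ordering.
    """
    missing = set(CATEGORIES) - set(scores)
    if missing:
        raise ValueError(f"missing categories: {sorted(missing)}")
    for name in CATEGORIES:
        if scores[name] not in (1, 2, 3, 4, 5):
            raise ValueError(f"{name} must be an integer from 1 to 5")
    return sum(scores[name] for name in CATEGORIES)


# Two made-up applicants with different strengths.
applicant_a = {
    "communication": 5,
    "scientific_process": 3,
    "independence": 4,
    "diversity": 5,
    "technical_skills": 3,
}
applicant_b = {
    "communication": 3,
    "scientific_process": 5,
    "independence": 5,
    "diversity": 3,
    "technical_skills": 4,
}

print(total_score(applicant_a), total_score(applicant_b))
```

Both made-up applicants end up with the same total even though their strengths differ, which is the point of weighting the categories equally.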

Blind evaluations

Once the application period closes, we’ll go through the applications and redact all names, grades, and other identifying information. Each application is assigned a number (“Applicant 1,” “Applicant 2,” etc.), and this is how we refer to it until the interview stage. That way we minimize or eliminate the chance that a name or a school might bias the way we evaluate the candidate.

Evaluation is not unilateral

Every Carini lab member evaluates each candidate. Every position. Every time. The lab group will have to work with the new hire. They’ll need to get along. They’ll need to fight over bench space. They’ll need to lean on each other.

Therefore, we all evaluate each candidate using a rubric and our own intuition. This approach has benefits across several tiers. First, junior lab members have an opportunity to evaluate the application materials that come in. This gives them the chance to distinguish between high-quality application materials and those of lower caliber, which is invaluable for undergraduates applying to graduate school or for jobs elsewhere. Second, every person in the lab is invested in the process: they each have an equal vote in who we hire. Third, when we find the right person, we’re sure there is a consensus among us that we hired the best person who applied.

The evaluation process can be challenging. We ask applicants to “submit an application that speaks to this position.” Because these instructions leave open what an application packet consists of, we get a diversity of responses: a cover letter, a narrative, a resume, or some combination of them. That makes evaluating the applications difficult because different applicants submit different materials. We provide minimal guidance to evaluators on how to score the applicants based on the rubric. It’s up to the evaluator, so long as they score consistently. I often recommend that evaluators read 3-5 applications before scoring to get a feel for the diversity of applications. There is no “right way” for lab members to score the applications. Each of us weighs things slightly differently, and this is how we want to keep it. Yet, thus far, we all usually agree on which applications are the strongest.

Ranking the candidates

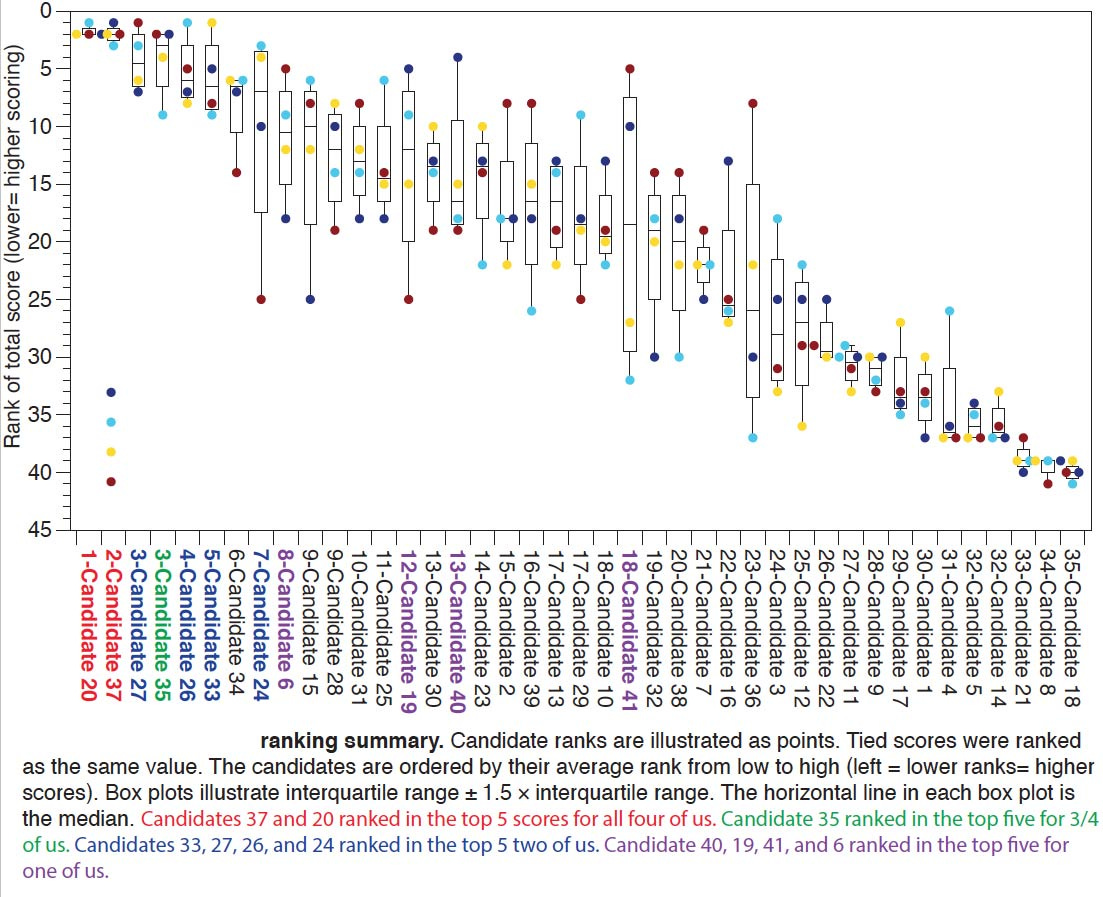

Once everyone has completed scoring the candidates, we rank each lab member’s scores (ties are averaged) and generate a box plot of the ranks of the scores (see the figure). This is the exciting point where our hard work pays off and the best candidates come to light! Typically, we have 3-5 applicants that we all scored highly (upper left in the figure), followed by a large group of applicants that there was no consensus on, and finally a few applicants that we all thought didn’t meet our standards.
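The ranking step can be sketched in a few lines of Python. This is an illustration under stated assumptions, not our actual script: the function name, the evaluator labels, and the scores are made up, and treating rank 1 as the highest score is a choice made here. Each evaluator’s scores are ranked separately, tied applicants share the average of the ranks they span, and the ranks are then collected per applicant, which is the data a box plot would display.

```python
from statistics import median


def average_ranks(scores):
    """Rank one evaluator's scores; rank 1 is the highest score (assumed).

    Tied applicants all receive the average of the ranks they occupy.
    """
    ordered = sorted(scores, key=scores.get, reverse=True)
    ranks = {}
    i = 0
    while i < len(ordered):
        # Extend j to the end of the run of applicants tied with ordered[i].
        j = i
        while j + 1 < len(ordered) and scores[ordered[j + 1]] == scores[ordered[i]]:
            j += 1
        shared = ((i + 1) + (j + 1)) / 2  # average of the 1-based positions
        for name in ordered[i : j + 1]:
            ranks[name] = shared
        i = j + 1
    return ranks


# Made-up total scores from three hypothetical evaluators.
evaluator_totals = {
    "Evaluator A": {"Applicant 1": 22, "Applicant 2": 18, "Applicant 3": 18, "Applicant 4": 9},
    "Evaluator B": {"Applicant 1": 20, "Applicant 2": 21, "Applicant 3": 15, "Applicant 4": 10},
    "Evaluator C": {"Applicant 1": 24, "Applicant 2": 17, "Applicant 3": 19, "Applicant 4": 8},
}

# Collect every evaluator's rank for each applicant.
ranks_by_applicant = {}
for totals in evaluator_totals.values():
    for applicant, rank in average_ranks(totals).items():
        ranks_by_applicant.setdefault(applicant, []).append(rank)

# Order applicants by median rank, the center line of each box in a box plot.
for applicant in sorted(ranks_by_applicant, key=lambda a: median(ranks_by_applicant[a])):
    print(applicant, ranks_by_applicant[applicant], median(ranks_by_applicant[applicant]))
```

Ranking within each evaluator before combining means a lab member who scores generously and one who scores harshly still contribute on the same footing, since only the order of their scores matters.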

We’ll have a face-to-face meeting to discuss which applicants we want to interview. We aim to interview the top 4-5 candidates. However, we have one ‘wild-card’ interview spot where lab members can make the case for interviewing someone who was not among the highest-ranked candidates. The catch is that we all must agree on one candidate for this wild-card interview spot, or it doesn’t get activated.

Wow, that’s a lot of work!

One of the common critiques of this approach is that this is a lot of work to go through for every hire. And it is. We each spend about a day’s worth of our time evaluating the candidates. But in the long run it pays off. Clear communication results in fewer headaches stemming from misunderstandings. People who are good at self-managing are more productive in our lab group, though we’re here to lend a hand when needed. It’s an up-front investment in what we hope is a long-term productive relationship.

Next newsletter, we’ll close this series (Part 1, Part 2, Part 3) out with a discussion of how we interview and select the applicant.
